Digital Education recently attended the Plymouth Medical School Personalised Assessment Conference to showcase some of the innovative work under way within PULSE to support the investigation of Content Adaptive Progress Testing for the BMBS (Bachelor of Medicine, Bachelor of Surgery) programme.

The first half of the conference focused on the theory behind content adaptive testing (Prof Steven Burr), how adaptive tests would be undertaken by students in QuizOne (Prof Jose Pego), and what students could expect when viewing and analysing their results (Robin Bailey and Nathan Marshall). The second half looked at student perceptions of adaptive testing (Paul Millin), how adaptive testing is being used in national formative assessments in Wales (Ben Smith), alongside other assessment-related topics.

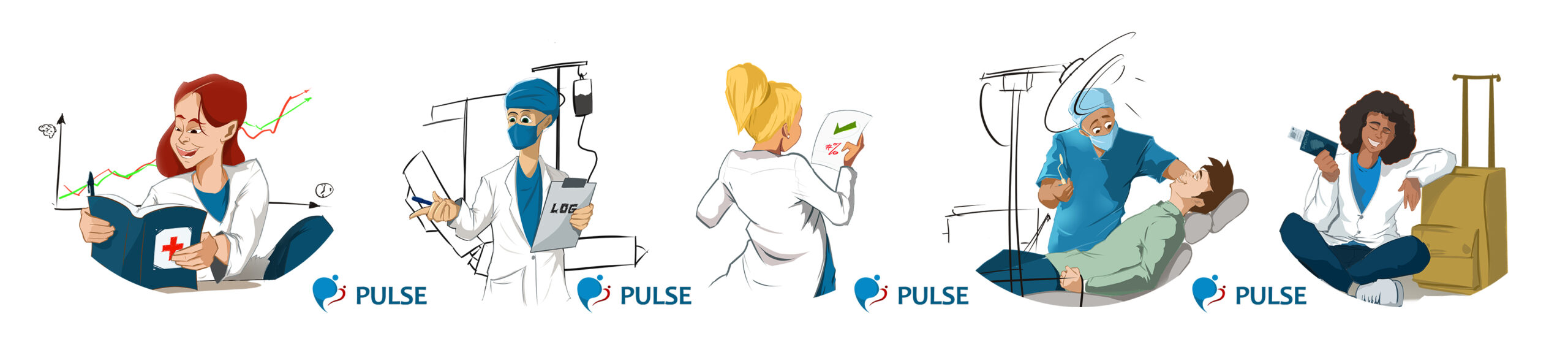

What is PULSE?

PULSE is a collection of bespoke functionality built on the university’s Salesforce implementation, covering the entire student journey. It is used by both staff and students within the Peninsula Medical School and Peninsula Dental School.

One of its key areas is the distribution and analysis of progress tests across the full duration of the programme, for both students and their tutors.

Why swap to content adaptive progress testing?

With the introduction of the Medical Licensing Assessment (MLA) by the General Medical Council (GMC), students undertaking the Bachelor of Medicine, Bachelor of Surgery (BMBS) undergraduate degree will need to complete the MLA examination before graduating. The MLA tests the core knowledge, skills, and behaviours expected of new doctors.

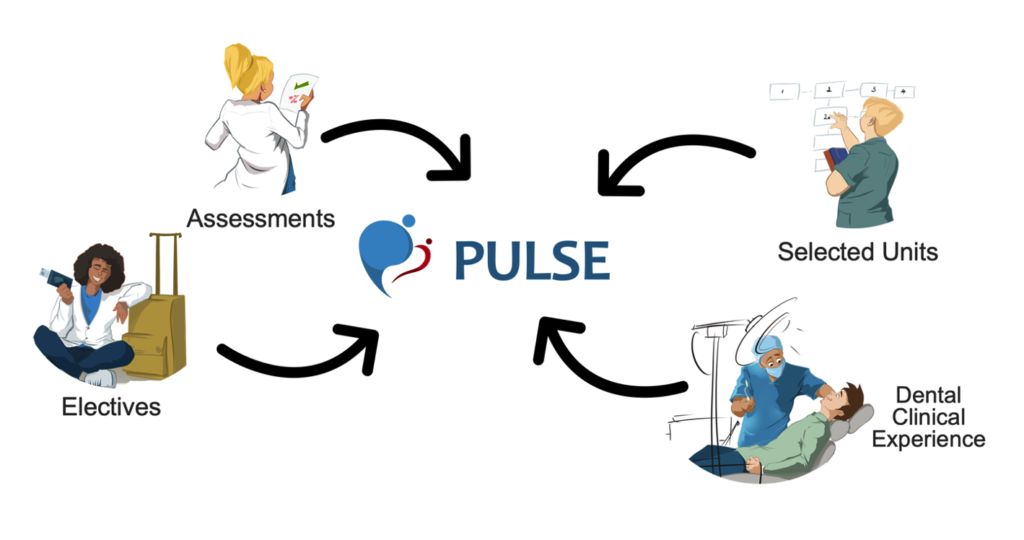

This allows for a novel approach to adaptive progress testing that prepares students by selecting the questions in each examination based on each student's prior performance. However, selection will be based on MLA topics (content) rather than on question difficulty. The aim is to ensure all students have both encountered and successfully answered each topic at least once before sitting the final Y5 MLA examination, focusing outcomes on holistic coverage and removing the possibility of compensation through norm-referenced grade boundaries.
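To make the idea of content-based (rather than difficulty-based) selection concrete, here is a minimal sketch in Python. It is an illustration only, not how QuizOne or PULSE selects questions: the `Question` class, the `select_questions` function, and the topic labels are all hypothetical names introduced for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Question:
    question_id: str
    topic: str  # hypothetical label standing in for an MLA topic


def select_questions(bank, topics_answered_correctly, test_length):
    """Pick questions for one student, prioritising topics not yet answered correctly.

    Assumption: selection depends only on topic coverage, never on difficulty.
    """
    outstanding = [q for q in bank if q.topic not in topics_answered_correctly]
    covered = [q for q in bank if q.topic in topics_answered_correctly]

    selected = []
    seen_topics = set()
    # First pass: at most one question per outstanding topic, to spread coverage.
    for q in outstanding:
        if len(selected) == test_length:
            break
        if q.topic not in seen_topics:
            selected.append(q)
            seen_topics.add(q.topic)
    # Second pass: fill any remaining slots, still preferring outstanding topics.
    for q in outstanding + covered:
        if len(selected) == test_length:
            break
        if q not in selected:
            selected.append(q)
    return selected


# Illustrative data only.
bank = [
    Question("q1", "Cardiology"),
    Question("q2", "Cardiology"),
    Question("q3", "Respiratory"),
    Question("q4", "Renal"),
]
next_test = select_questions(bank, topics_answered_correctly={"Cardiology"}, test_length=3)
```

In this sketch a student who has already answered a Cardiology question correctly is first offered Respiratory and Renal questions, and only then a further Cardiology question to fill the remaining slot.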

What changes are required?

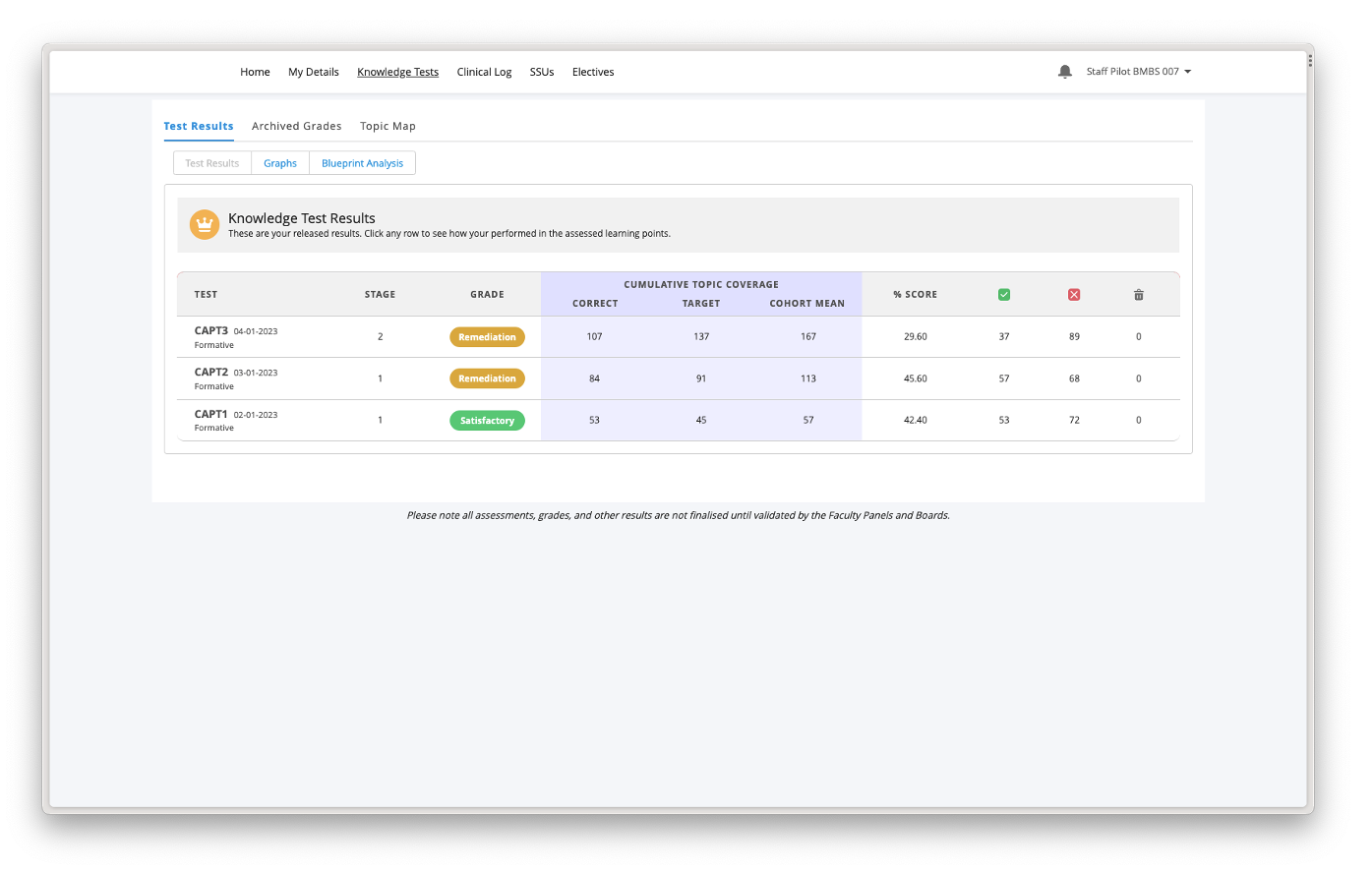

As assessment results are released and made visible in PULSE, implementing adaptive testing will require a series of developments to ensure this new wealth of data is not just available but actionable for the learner. This also marks a significant first step towards an advanced form of personalised learning tailored to individual students’ needs.

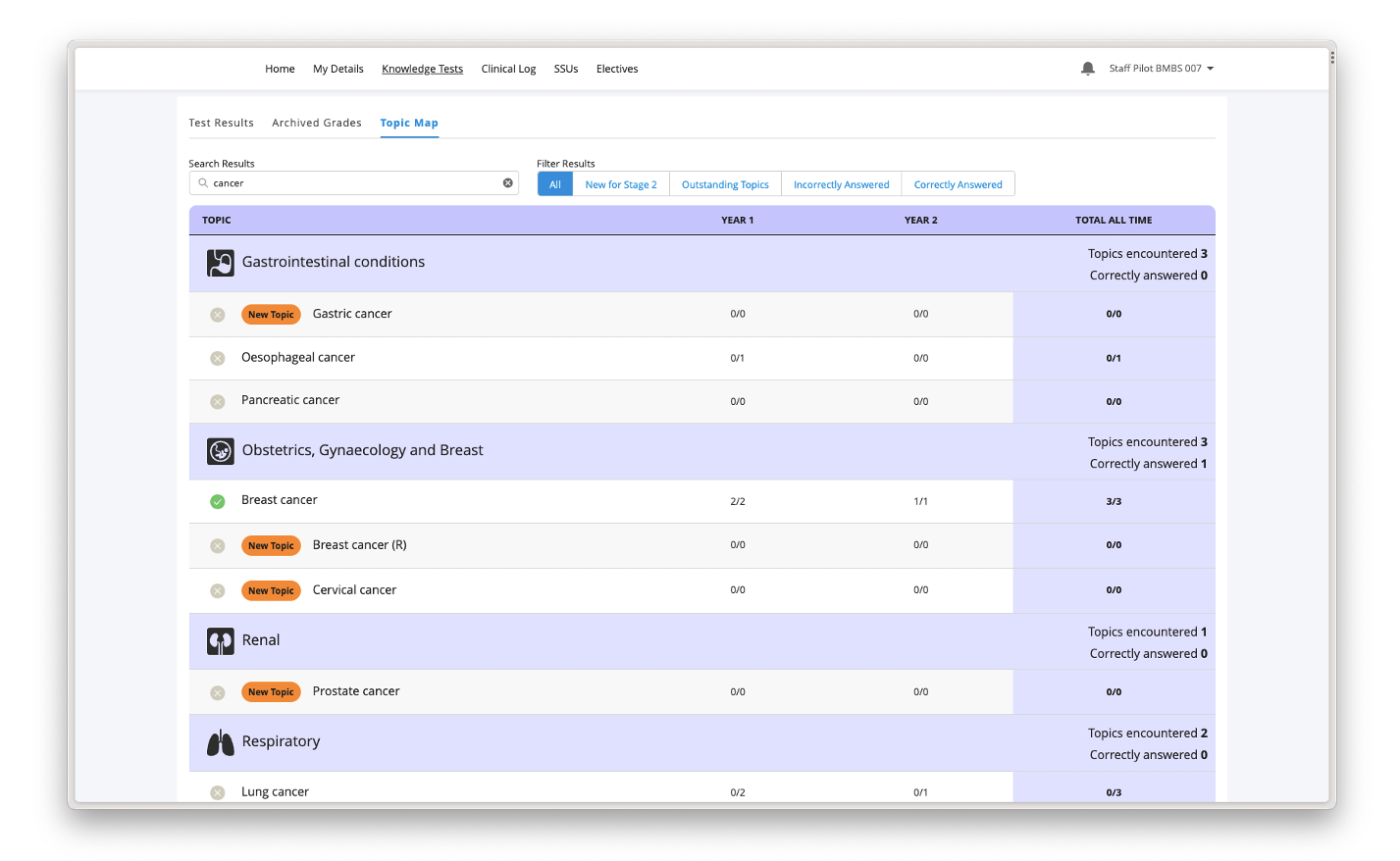

Significant changes were made to the technical underpinnings, ranging from data relationships to visualisations. In standard progress testing the concept of ‘Topics’ simply does not exist. We have now implemented the GMC’s topic map within PULSE, allowing us to link each question within a test to a topic and to track where students have encountered each topic and how they performed, at question-level granularity.
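As a rough illustration of what question-to-topic linking makes possible, the sketch below rolls question-level responses up into a per-student, per-topic summary. It is written in Python for readability and does not reflect the actual Salesforce objects behind PULSE; `Response`, `topic_outcomes`, and the field names are assumptions made for this example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Response:
    student_id: str
    test_id: str
    question_id: str
    correct: bool


def topic_outcomes(responses, question_topics):
    """Count, per (student, topic), how often a topic was encountered and answered correctly.

    question_topics maps each question_id to its topic (hypothetical structure).
    """
    summary = defaultdict(lambda: {"encountered": 0, "correct": 0})
    for r in responses:
        topic = question_topics[r.question_id]
        entry = summary[(r.student_id, topic)]
        entry["encountered"] += 1
        if r.correct:
            entry["correct"] += 1
    return dict(summary)


# Illustrative data only.
question_topics = {"q1": "Cardiology", "q2": "Cardiology", "q3": "Renal"}
responses = [
    Response("s1", "t1", "q1", True),
    Response("s1", "t1", "q3", False),
    Response("s1", "t2", "q2", False),
]
outcomes = topic_outcomes(responses, question_topics)
```

Because each response keeps its test and question identifiers, the same records can feed both a per-test view and a per-topic view without storing the data twice.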

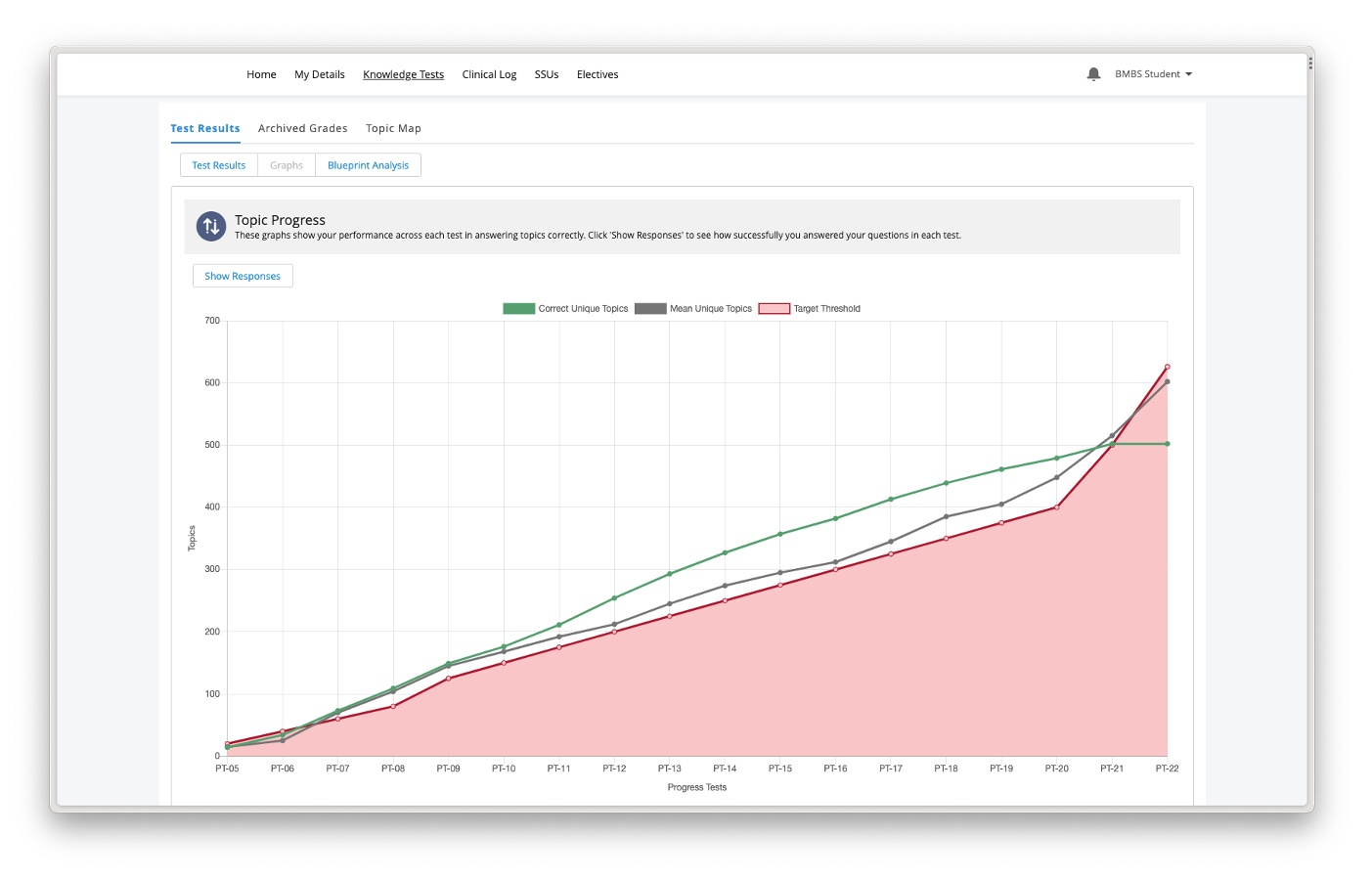

To visualise this, we have built two key components: a Progress Graph, focussed on student achievement relative to programme progression, and a Topic Map for targeted learning, helping to focus effort and insight.

Opportunities

Should content adaptive testing be adopted, and these changes rolled out to students in the new academic year, a number of opportunities begin to emerge for both staff and students.

One example for staff is the new ability to perform full cohort analysis based on topics being answered correctly or incorrectly. This would then offer the ability to infer topic success by programme stage and consider if additional teaching or resources are required to help students perform in these areas.
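The kind of cohort analysis described above could look something like the following Python sketch, which computes the proportion of correct answers per programme stage and topic and flags the weaker combinations. The function names, the record layout, and the 0.5 threshold are illustrative assumptions, not part of PULSE.

```python
from collections import defaultdict


def topic_success_by_stage(records):
    """Return {(stage, topic): proportion correct} from (stage, topic, correct) tuples."""
    totals = defaultdict(lambda: [0, 0])  # [correct answers, attempts]
    for stage, topic, correct in records:
        totals[(stage, topic)][1] += 1
        if correct:
            totals[(stage, topic)][0] += 1
    return {key: c / n for key, (c, n) in totals.items()}


def topics_needing_support(success, threshold=0.5):
    """List (stage, topic) pairs whose success rate falls below an assumed threshold."""
    return sorted(key for key, rate in success.items() if rate < threshold)


# Illustrative data only.
records = [
    ("Y1", "Cardiology", True),
    ("Y1", "Cardiology", False),
    ("Y1", "Renal", False),
    ("Y2", "Renal", True),
]
success = topic_success_by_stage(records)
flagged = topics_needing_support(success)
```

Where the threshold sits, and whether a low rate calls for extra teaching or extra resources, would remain a judgement for programme staff.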

In the future, with the MLA Topic Map implemented, this could be expanded and linked to more areas of the student journey to give a complete view of contact with clinical areas and create an almost ‘self-generating learning plan’.

Machine Learning and the future

Enabled by this groundwork, machine learning (ML) and artificial intelligence could open up new opportunities for personalised and predictive support for students. We explored how a traditional approach would involve capturing data, then exploring it to review the output and create rules for learners.

With structured legacy data, we could now apply ML models to explore datasets and find trends rather than conceive them ourselves, unencumbered by bias or an inability to see complex and underlying patterns. Going forward, we have the ability, if we choose, to generate probabilistic predictions, based on past performance across data areas and an entire cohort of prior students, to guide extra support.

In conclusion…

Adaptive testing is being piloted now and is planned to be rolled out in the next academic year.

Please note all data in this blog post is fabricated for the purposes of demonstration.

This is the first of a series of blog posts that will give you an in-depth look at all the features and functionalities within PULSE. Stay tuned over the coming months.